Autonomous Facial Recognition Turret (2025)

A 3D-printed robotics project that I designed and built around an internal Raspberry Pi. Watch the YouTube video below for a full explanation of what I achieved and how it was done.

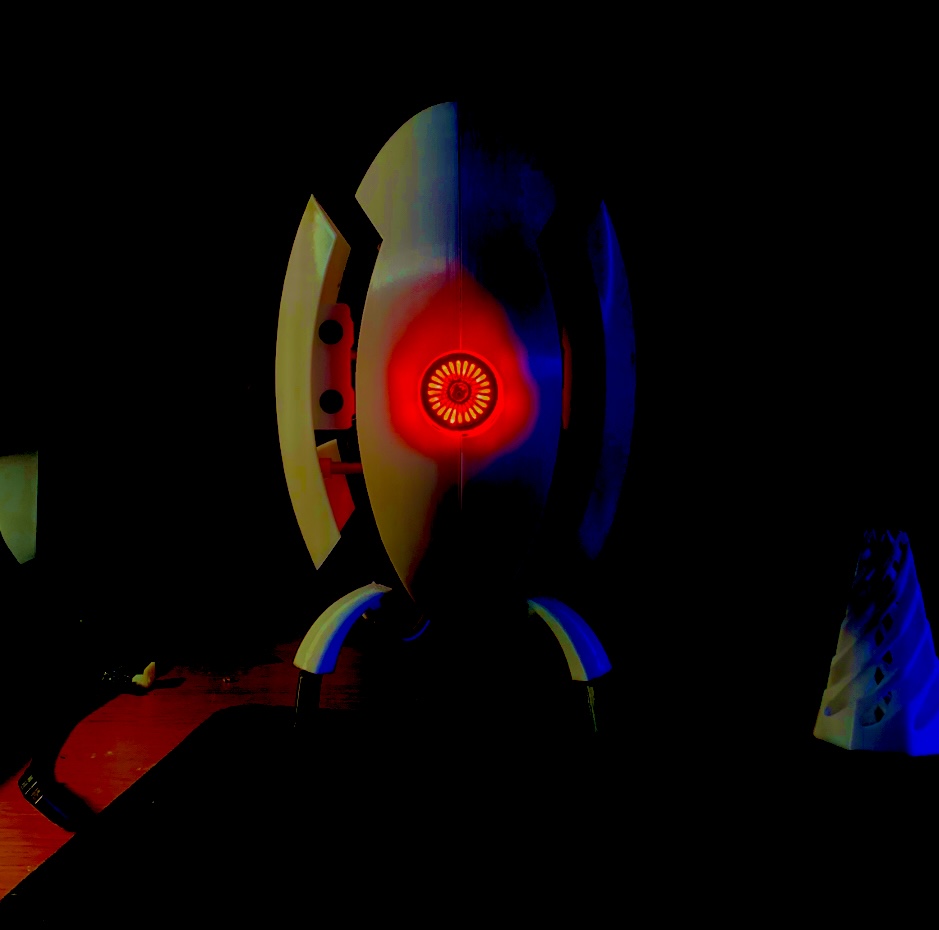

It uses a locally hosted facial recognition algorithm to determine whether a person is authorised and, if not, 'engages' the intruder with flashing lights and shooting sounds. The project is a real-life imitation of the infamous Portal turret and uses its voice lines, but unlike its video game counterpart it is much smaller and far less dangerous, shrunk down to a cute tabletop form factor.
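In code terms, the per-frame behaviour described above reduces to a small decision rule. The sketch below is a hypothetical, hardware-free restatement of it rather than the project's actual code: `choose_action` and its return strings are illustrative names, and it assumes the recogniser has already produced one name per detected face, with unrecognised faces labelled `"Unknown"`.

```python
def choose_action(face_names: list[str], authorised_names: set[str]) -> str:
    """Pick the turret's response for one frame's worth of recognised names (illustrative)."""
    if not face_names:
        return "search"        # nobody in view
    if any(name in authorised_names for name in face_names):
        return "stand_down"    # any authorised face suppresses firing
    if len(face_names) > 1:
        return "spray"         # several intruders: sweep the base back and forth
    return "aim_and_fire"      # exactly one intruder: aim at it


print(choose_action(["Unknown"], {"Theo"}))  # aim_and_fire
```

As in the turret script, the authorised check comes first, so a friend standing next to an intruder keeps the turret from firing.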

Gallery:

Project report, code, relevant resources and attributions will be included here.

Code:

```python
from picamera2 import Picamera2
import cv2
import numpy as np
import time
import face_recognition
import pickle
import os
from gpiozero import LED
from gpiozero import Button
import math
import pigpio
import subprocess
import random

#os.environ['PULSE_SERVER'] = 'unix:/run/user/$(id-u)/pulse/native'
time.sleep(5)
import pygame
subprocess.run(['sudo', 'pigpiod'])  # start the pigpio daemon that generates the servo pulses
time.sleep(1)
pi = pigpio.pi()
#print(os.environ)
os.chdir('/home/turret2/Face_Recognition')

# Define pins
basepin = 18  # base servo: 1650 neutral, 1150 min, 2150 max
wingpin = 17  # wing servo: 1500 stops movement
buttonpin = 25
rightled = LED(16)
leftled = LED(12)
button = Button(buttonpin)

# Configure pin behaviour
pi.set_mode(buttonpin, pigpio.INPUT)
pi.set_pull_up_down(buttonpin, pigpio.PUD_UP)
```

```python
# Load pre-trained face encodings
print("[INFO] loading encodings...")
with open("encodings.pickle", "rb") as f:
    data = pickle.loads(f.read())
known_face_encodings = data["encodings"]
known_face_names = data["names"]

cv_scaler = 4  # this has to be a whole number

face_locations = []
face_encodings = []
face_names: list[str] = []
frame_count = 0

fps = 0
authorised_names = ["Theo"]

kernel = np.ones((3, 3), np.uint8)  # dilation kernel used by motion_detected()

x_resolution = 4000
y_resolution = 2160

picam2 = Picamera2()
picam2.configure(picam2.create_preview_configuration(main={"format": 'XRGB8888', "size": (x_resolution, y_resolution)}))
picam2.start()

# Initialise audio
pygame.mixer.init()
noise_silent = pygame.mixer.Sound("turretaudio/silent_half-second.wav")
Turret_active = pygame.mixer.Sound("turretaudio/Turret_active.wav")
Turret_alarm = pygame.mixer.Sound("turretaudio/Turret_alarm.wav")
Turret_deploy = pygame.mixer.Sound("turretaudio/Turret_deploy.wav")
Turret_ping = pygame.mixer.Sound("turretaudio/Turret_ping.wav")
Turret_retract = pygame.mixer.Sound("turretaudio/Turret_retract.wav")
Turret_turret_fire_4x_03 = pygame.mixer.Sound("turretaudio/Turret_turret_fire_4x_03.wav")

background_music = [
    "turretaudio/Portal2-16-Hard_Sunshine.mp3",
    "turretaudio/Portal2-02-Halls_Of_Science_4.mp3",
    "turretaudio/Portal2-03-999999.mp3",
    "turretaudio/Portal2-03-FrankenTurrets.mp3",
    "turretaudio/Portal2-06-TEST.mp3",
    "turretaudio/Portal2-09-The_Future_Starts_With_You.mp3",
    "turretaudio/Portal2-10-There_She_Is.mp3",
    "turretaudio/Portal2-13-Music_Of_The_Spheres.mp3",
    "turretaudio/Portal2-14-You_Are_Not_Part_Of_The_Control_Group.mp3",
    "turretaudio/Portal2-15-Space_Phase.mp3",
    "turretaudio/Portal2-17-Robot_Waiting_Room_#1.mp3",
    "turretaudio/Portal2-18-Robot_Waiting_Room_#2.mp3",
]

sound_active = [
    pygame.mixer.Sound("turretaudio/Turret_turret_active_1.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_active_2.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_active_4.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_active_6.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_active_7.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_active_8.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_suppress_1.wav"),
]

sound_search = [
    pygame.mixer.Sound("turretaudio/Turret_turret_autosearch_1.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_autosearch_2.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_autosearch_4.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_autosearch_5.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_autosearch_6.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_search_4.wav"),
]

sound_deploy = [
    pygame.mixer.Sound("turretaudio/Turret_turret_deploy_1.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_deploy_2.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_deploy_4.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_deploy_5.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_deploy_6.wav"),
]

sound_sleep = [
    pygame.mixer.Sound("turretaudio/Turret_turret_retire_1.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_retire_2.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_retire_4.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_retire_5.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_retire_6.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_retire_7.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turretshotbylaser09.wav"),
]

sound_targetlost = [
    pygame.mixer.Sound("turretaudio/Turret_turret_search_1.wav"),
    pygame.mixer.Sound("turretaudio/Turret_turret_search_2.wav"),
]

# Loop a randomly chosen background track at low volume
pygame.mixer.music.load(random.choice(background_music))
pygame.mixer.music.set_volume(0.15)
pygame.mixer.music.play(-1)

number_of_faces = 0
target_face_center_x = 400
authorised_face_detected = False
```
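The script expects `encodings.pickle` to hold a dictionary with parallel `"encodings"` and `"names"` lists, one name per encoding. The project does not show how that file is produced, so the sketch below only illustrates the assumed layout: it writes made-up 128-value vectors (the length of a `face_recognition` encoding) to a hypothetical temporary path, then reads them back with the same loading pattern as the turret script.

```python
import os
import pickle
import tempfile

import numpy as np

# Hypothetical stand-ins for real face encodings (face_recognition produces 128-value arrays)
rng = np.random.default_rng(0)
data = {
    "encodings": [rng.random(128), rng.random(128)],
    "names": ["Theo", "Theo"],
}

path = os.path.join(tempfile.mkdtemp(), "encodings.pickle")
with open(path, "wb") as f:
    f.write(pickle.dumps(data))

# Same loading pattern as the turret script
with open(path, "rb") as f:
    loaded = pickle.loads(f.read())
known_face_encodings = loaded["encodings"]
known_face_names = loaded["names"]
print(len(known_face_encodings), known_face_names)
```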

```python
def process_frame(frame):
    global face_locations, face_encodings, face_names, number_of_faces, target_face_center_x, authorised_face_detected

    # Rotate the frame 180 degrees (flip vertically and horizontally)
    rotated_frame = np.flipud(np.fliplr(frame))

    # Resize the frame using cv_scaler to increase performance (fewer pixels processed, less time spent)
    resized_frame = cv2.resize(rotated_frame, (0, 0), fx=(1/cv_scaler), fy=(1/cv_scaler))

    # Convert the image from BGR to RGB colour space: the facial recognition library uses RGB, OpenCV uses BGR
    rgb_resized_frame = cv2.cvtColor(resized_frame, cv2.COLOR_BGR2RGB)

    # Find all the faces and face encodings in the current frame of video
    face_locations = face_recognition.face_locations(rgb_resized_frame)
    face_encodings = face_recognition.face_encodings(rgb_resized_frame, face_locations, model='large')

    face_names = []
    authorised_face_detected = False
    for face_encoding in face_encodings:
        # See if the face is a match for the known face(s)
        matches = face_recognition.compare_faces(known_face_encodings, face_encoding)
        name = "Unknown"

        # Use the known face with the smallest distance to the new face
        face_distances = face_recognition.face_distance(known_face_encodings, face_encoding)
        best_match_index = np.argmin(face_distances)
        if matches[best_match_index]:
            name = known_face_names[best_match_index]
            # Check if the detected face is in our authorised list
            if name in authorised_names:
                authorised_face_detected = True
        face_names.append(name)

    if face_locations:  # Ensure at least one face is detected
        top, right, bottom, left = face_locations[0]  # Use the first detected face
        # x-coordinate of the centre, scaled from the resized frame back up to full resolution
        target_face_center_x = ((left + right) // 2) * cv_scaler
        number_of_faces = len(face_locations)  # Calculate the number of faces detected
    else:
        number_of_faces = 0

    print(number_of_faces)
    return frame
```
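Together, `compare_faces` and `face_distance` amount to a nearest-neighbour lookup with a distance cut-off. The NumPy-only sketch below reproduces that idea without dlib, assuming Euclidean distance and a tolerance of 0.6 (the default tolerance of `face_recognition.compare_faces`); `identify` is a hypothetical helper name, and unlike the script it also guards against an empty list of known faces, where `np.argmin` would raise.

```python
import numpy as np


def identify(known_encodings, known_names, encoding, tolerance=0.6):
    """Return the name of the closest known face, or "Unknown" if none is close enough."""
    if len(known_encodings) == 0:
        return "Unknown"
    # Euclidean distance from the new encoding to every known encoding
    distances = np.linalg.norm(np.asarray(known_encodings) - encoding, axis=1)
    best = int(np.argmin(distances))
    return known_names[best] if distances[best] <= tolerance else "Unknown"


known = [np.zeros(128), np.ones(128)]
names = ["Theo", "Other"]
print(identify(known, names, np.full(128, 0.01)))  # Theo
```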

```python
def motion_detected(threshold=30, min_area=200):
    # Reuse the global picam2 that is already running; opening a second Picamera2()
    # on the same camera inside this function would fail while the first holds it.

    # Capture the first frame
    frame1b = picam2.capture_array()
    # Wait so that there will be a difference between the two photos
    time.sleep(0.1)
    # Capture the second frame for comparison
    frame2b = picam2.capture_array()

    # Downscale both frames to speed up processing
    frame1 = cv2.resize(frame1b, (0, 0), fx=(1/cv_scaler), fy=(1/cv_scaler))
    frame2 = cv2.resize(frame2b, (0, 0), fx=(1/cv_scaler), fy=(1/cv_scaler))

    # Convert frames to grayscale and blur them
    gray1 = cv2.GaussianBlur(cv2.cvtColor(frame1, cv2.COLOR_BGR2GRAY), (21, 21), 0)
    gray2 = cv2.GaussianBlur(cv2.cvtColor(frame2, cv2.COLOR_BGR2GRAY), (21, 21), 0)

    # Compute absolute difference between the frames
    diff = cv2.absdiff(gray1, gray2)

    # Apply a threshold to highlight the difference
    _, thresh = cv2.threshold(diff, threshold, 255, cv2.THRESH_BINARY)

    # Dilate to join nearby changes, then find contours to detect motion
    contours, _ = cv2.findContours(cv2.dilate(thresh, kernel, iterations=2), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    # Motion is detected if any contour is larger than min_area
    return any(cv2.contourArea(c) > min_area for c in contours)
```
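The motion check is classic frame differencing. As a dependency-light illustration, the sketch below applies the same difference-and-threshold idea to two NumPy greyscale arrays, but it simplifies the contour step: it counts changed pixels instead of measuring contour areas, so `min_changed_pixels` is a hypothetical stand-in for `min_area`, and the blur and dilation are omitted.

```python
import numpy as np


def simple_motion(gray1, gray2, threshold=30, min_changed_pixels=200):
    """Report motion when enough pixels change by more than `threshold` between frames."""
    # Absolute per-pixel difference; widen to int16 first so uint8 subtraction cannot wrap around
    diff = np.abs(gray1.astype(np.int16) - gray2.astype(np.int16))
    changed = diff > threshold  # boolean mask, like cv2.threshold(..., THRESH_BINARY)
    return int(changed.sum()) > min_changed_pixels


still = np.zeros((540, 1000), dtype=np.uint8)
moved = still.copy()
moved[100:130, 200:230] = 255  # a 30x30 bright patch appears
print(simple_motion(still, still), simple_motion(still, moved))  # False True
```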

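```python
def full_target_spray():
    frequency = 0.5  # sweep frequency in Hz
    start_time = time.time()
    ishot = 0

    # Blink both LEDs rapidly in the background while sweeping
    leftled.blink(on_time=0.03, off_time=0.04)
    rightled.blink(on_time=0.03, off_time=0.04)

    while time.time() - start_time < 4:  # 4 second timer
        if ishot == 7:  # play the firing sound every 7th iteration
            Turret_turret_fire_4x_03.play()
            ishot = 0
        # Sweep the base sinusoidally between 1150 and 2150 around the 1650 neutral,
        # using elapsed time so every sweep starts from the centre
        elapsed = time.time() - start_time
        sinbasepulsewidth = 500 * math.sin(2 * math.pi * frequency * elapsed) + 1650
        print(sinbasepulsewidth)
        pi.set_servo_pulsewidth(basepin, sinbasepulsewidth)  # rotates to target
        time.sleep(0.005)
        ishot = ishot + 1

    # Stop the background blinking once the sweep ends
    leftled.off()
    rightled.off()
```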
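With `frequency = 0.5` Hz the base pulse width follows `1650 + 500 * sin(2 * pi * frequency * t)`, oscillating between 1150 and 2150 µs (pigpio servo pulse widths are in microseconds) and completing one full left-right sweep every 2 seconds, so the 4-second loop covers two sweeps. The small sketch below, using a hypothetical helper `sweep_pulsewidth`, computes that curve from elapsed time so it can be checked without a servo.

```python
import math


def sweep_pulsewidth(elapsed, frequency=0.5, centre=1650, amplitude=500):
    """Servo pulse width (microseconds) for a sinusoidal sweep, `elapsed` seconds in."""
    return centre + amplitude * math.sin(2 * math.pi * frequency * elapsed)


# Sample the 4-second spray every 5 ms, as the loop's sleep does (ignoring loop overhead)
samples = [sweep_pulsewidth(i * 0.005) for i in range(800)]
print(round(min(samples)), round(max(samples)))  # 1150 2150
```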

```python
def single_target_shot():
    # Map the face centre's x-coordinate linearly onto the base servo range 1150..2150
    basepulsewidth = 1150 + (target_face_center_x / x_resolution) * (2150 - 1150)
    pi.set_servo_pulsewidth(basepin, basepulsewidth)  # rotates to target
    print(basepulsewidth)

    # Three bursts, each a firing sound plus four quick flashes of both LEDs
    for burst in range(3):
        Turret_turret_fire_4x_03.play()
        for flash in range(4):
            rightled.on()
            leftled.on()
            time.sleep(0.03)
            rightled.off()
            leftled.off()
            time.sleep(0.04)
```
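`single_target_shot` maps the face's horizontal position linearly onto the base servo's 1150 to 2150 range. Because `face_recognition` reports face boxes in the downscaled frame (`1/cv_scaler` of the full width), the centre coordinate has to be scaled back up by `cv_scaler` before it is compared against `x_resolution`. The sketch below, with a hypothetical helper name `x_to_pulsewidth` and an added clamp, shows the mapping end to end.

```python
def x_to_pulsewidth(center_x_scaled, cv_scaler=4, x_resolution=4000,
                    min_pw=1150, max_pw=2150):
    """Map a face centre measured in the downscaled frame to a base-servo pulse width."""
    full_res_x = center_x_scaled * cv_scaler  # undo the 1/cv_scaler resize
    fraction = min(max(full_res_x / x_resolution, 0.0), 1.0)  # clamp to the frame
    return min_pw + fraction * (max_pw - min_pw)


# The downscaled frame is 4000 / 4 = 1000 pixels wide
print(x_to_pulsewidth(0), x_to_pulsewidth(500), x_to_pulsewidth(1000))  # 1150.0 1650.0 2150.0
```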

```python
def state_friendly():
    Turret_ping.play()
    retract_wings()
    time.sleep(10)  # stay idle for 10 s before looking again
```

```python
def deploy_wings():
    print("deployingwing")
    pi.set_servo_pulsewidth(wingpin, 1500)  # start with neutral movement (prevents jitter movement)
    time.sleep(0.3)
    Turret_deploy.play()

    # Deploy wings: step the pulse width down to 1000 and back, then stop at 1500
    for pulsewidth, pause in [(1300, 0.1), (1200, 0.1), (1100, 0.1), (1000, 0.4),
                              (1100, 0.1), (1200, 0.1), (1300, 0.1), (1500, 0.3)]:
        pi.set_servo_pulsewidth(wingpin, pulsewidth)
        time.sleep(pause)
```
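`deploy_wings` steps the wing servo's pulse width from the 1500 stop point out to 1000 and back again, holding the extreme for longer. A hypothetical helper like `ramp_profile` below could generate such symmetric step sequences from a few parameters instead of hard-coding each call; it only returns `(pulsewidth, pause)` pairs, so it can be tested without pigpio.

```python
def ramp_profile(first, peak, step=100, stop=1500,
                 step_pause=0.1, peak_pause=0.4, stop_pause=0.3):
    """Symmetric ramp first -> peak -> first, then `stop`, as (pulsewidth, pause) pairs."""
    direction = 1 if peak > first else -1
    outward = list(range(first, peak, direction * step))  # excludes the peak itself
    steps = [(pw, step_pause) for pw in outward]
    steps.append((peak, peak_pause))  # hold the extreme a little longer
    steps += [(pw, step_pause) for pw in reversed(outward)]
    steps.append((stop, stop_pause))  # finish at the stop point
    return steps


deploy = ramp_profile(1300, 1000)
print([pw for pw, _ in deploy])  # [1300, 1200, 1100, 1000, 1100, 1200, 1300, 1500]
```

Each pair would then be applied with `pi.set_servo_pulsewidth(wingpin, pw)` followed by `time.sleep(pause)`; with the same defaults, `ramp_profile(1700, 2000)` reproduces the retract sequence used in `state_targetlost`.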

```python
def retract_wings():
    print("retractwing")
    pi.set_servo_pulsewidth(basepin, 1650)  # Centres base and waits 0.5 s before retracting
    time.sleep(0.5)
    pi.set_servo_pulsewidth(wingpin, 1500)  # start with neutral movement (prevents jitter movement)
    time.sleep(0.3)
    Turret_retract.play()

    # Retract wings: step the pulse width up to 2000
    for pulsewidth, pause in [(1700, 0.1), (1800, 0.1), (1900, 0.1), (2000, 0.5)]:
        pi.set_servo_pulsewidth(wingpin, pulsewidth)
        time.sleep(pause)

    # Step back down, stopping early as soon as the button is pressed
    for pulsewidth in (1900, 1800, 1700):
        if button.is_pressed:
            break
        pi.set_servo_pulsewidth(wingpin, pulsewidth)
        time.sleep(0.1)

    pi.set_servo_pulsewidth(wingpin, 1500)  # stop movement
    time.sleep(0.3)
```
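`retract_wings` polls the button (presumably a limit switch on the wings) between steps on the way back down and stops the servo as soon as it reads pressed. The sketch below isolates that early-exit pattern; `set_pw`, `is_pressed` and `sleep` are hypothetical injected callables standing in for `pi.set_servo_pulsewidth`, gpiozero's `button.is_pressed` property and `time.sleep`, so the sequence can be checked with fakes.

```python
def step_down_until_pressed(set_pw, is_pressed, sleep, steps=(1900, 1800, 1700), stop=1500):
    """Step through `steps`, bailing out early if the button reads pressed, then stop."""
    sent = []
    for pw in steps:
        if is_pressed():
            break  # button pressed: skip the remaining steps
        set_pw(pw)
        sent.append(pw)
        sleep(0.1)
    set_pw(stop)  # always finish at the stop point
    sent.append(stop)
    return sent


# Fake button that reads "pressed" on the third poll
polls = iter([False, False, True])
print(step_down_until_pressed(lambda pw: None, lambda: next(polls), lambda s: None))
# [1900, 1800, 1500]
```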

```python
def state_targetlost():
    print("retractwing - target lost")
    random.choice(sound_targetlost).play()  # play a target-lost voice line

    pi.set_servo_pulsewidth(basepin, 1650)  # Centres base and waits 1.5 s before retracting
    time.sleep(1.5)
    pi.set_servo_pulsewidth(wingpin, 1500)  # start with neutral movement (prevents jitter movement)
    time.sleep(0.3)
    Turret_retract.play()

    # Retract wings: step the pulse width up to 2000 and back down, then stop at 1500
    for pulsewidth, pause in [(1700, 0.1), (1800, 0.1), (1900, 0.1), (2000, 0.4),
                              (1900, 0.1), (1800, 0.1), (1700, 0.1), (1500, 0.3)]:
        pi.set_servo_pulsewidth(wingpin, pulsewidth)
        time.sleep(pause)

    # Drive the wing servo at 500 for up to 2 s, or until the button is pressed
    start_time = time.time()
    while time.time() - start_time < 2:  # 2 second timer
        pi.set_servo_pulsewidth(wingpin, 500)
        if button.is_pressed:
            print("Button pressed!")
            break
    pi.set_servo_pulsewidth(wingpin, 1500)  # stop movement (otherwise the servo keeps running at 500)
```
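The final loop in `state_targetlost` is a poll-with-timeout: keep driving the servo until either the button is pressed or 2 seconds pass. A generic version of that pattern, sketched below with a hypothetical `wait_until` helper, uses `time.monotonic()` (which is not affected by system clock adjustments, unlike `time.time()`) and a short sleep between polls so the loop does not spin the CPU.

```python
import time


def wait_until(condition, timeout, poll_interval=0.01):
    """Poll `condition()` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(poll_interval)
    return False


print(wait_until(lambda: True, timeout=2))      # True (returns immediately)
print(wait_until(lambda: False, timeout=0.05))  # False after about 50 ms
```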

```python
while True:
    print("program started")

    # Idle until the camera sees motion, then deploy the wings
    while True:
        time.sleep(0.5)
        if motion_detected():
            random.choice(sound_deploy).play()
            deploy_wings()
            print("motion detected")
            break
        noise_silent.play()  # keeps audio channel active
        print("waiting for motion")

    for attempt in range(5):  # attempts 5 times to find a face; if not, goes to sleep
        time.sleep(0.6)
        noise_silent.play()  # keeps audio channel active
        process_frame(picam2.capture_array())
        print(authorised_face_detected, number_of_faces, target_face_center_x)

        if number_of_faces > 0:
            print("Face detected")
            if authorised_face_detected:  # target is authorised
                print("friend detected")
                state_friendly()
            elif number_of_faces > 1:  # several unauthorised targets
                while True:
                    print("opps detected")
                    full_target_spray()
                    process_frame(picam2.capture_array())
                    print(authorised_face_detected, number_of_faces, target_face_center_x)
                    if authorised_face_detected or number_of_faces == 0:
                        print("target no longer visible, or authorised face prevents further action")
                        break
                retract_wings()
            else:  # a single unauthorised target
                while True:
                    print("opp detected")
                    single_target_shot()
                    process_frame(picam2.capture_array())
                    print(authorised_face_detected, number_of_faces, target_face_center_x)
                    if authorised_face_detected or number_of_faces != 1:
                        print("target no longer visible, or authorised face prevents further action")
                        break
                # If the lone target was joined by other unknown faces, finish with a full sweep
                if not authorised_face_detected and number_of_faces > 1:
                    full_target_spray()
                retract_wings()
            random.choice(sound_sleep).play()
            break
    else:
        # No targets found despite 5 face checks
        print("no faces detected")
        state_targetlost()
        time.sleep(1.5)
        random.choice(sound_sleep).play()
```
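The main loop relies on Python's `for ... else`: the `else` block only runs when all five attempts finish without a `break`, meaning no face was ever seen. The sketch below shows that control flow on its own, with a hypothetical `detect_faces` callable standing in for capturing a frame and running `process_frame`.

```python
def search_for_faces(detect_faces, attempts=5):
    """Try up to `attempts` times; return the attempt index that found a face, or None."""
    for attempt in range(attempts):
        if detect_faces() > 0:
            print(f"face found on attempt {attempt + 1}")
            break
    else:
        # Only reached when the loop never hit `break`
        print("no faces detected")
        return None
    return attempt


readings = iter([0, 0, 2])
print(search_for_faces(lambda: next(readings)))  # prints "face found on attempt 3", then 2
print(search_for_faces(lambda: 0))               # prints "no faces detected", then None
```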

LICENCES and ATTRIBUTIONS:

MIT License

Copyright (c) 2021 Adam Geitgey

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

MIT License

Copyright (c) 2021 Caroline Dunn

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Stump-based 24x24 discrete(?) adaboost frontal face detector.

Created by Rainer Lienhart.

////////////////////////////////////////////////////////////////////////////////////////

IMPORTANT: READ BEFORE DOWNLOADING, COPYING, INSTALLING OR USING.

By downloading, copying, installing or using the software you agree to this license. If you do not agree to this license, do not download, install, copy or use the software.

Intel License Agreement

For Open Source Computer Vision Library

Copyright (C) 2000, Intel Corporation, all rights reserved.

Third party copyrights are property of their respective owners.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

* Redistribution's of source code must retain the above copyright notice, this list of conditions and the following disclaimer.
* Redistribution's in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.
* The name of Intel Corporation may not be used to endorse or promote products derived from this software without specific prior written permission.

This software is provided by the copyright holders and contributors "as is" and any express or implied warranties, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose are disclaimed. In no event shall the Intel Corporation or contributors be liable for any direct, indirect, incidental, special, exemplary, or consequential damages (including, but not limited to, procurement of substitute goods or services; loss of use, data, or profits; or business interruption) however caused and on any theory of liability, whether in contract, strict liability, or tort (including negligence or otherwise) arising in any way out of the use of this software, even if advised of the possibility of such damage.